pymovements in 10 minutes

What you will learn in this tutorial:

how to download one of the publicly available datasets

how to load a subset of the data into your memory

how to transform pixel coordinates into degrees of visual angle

how to transform positional data into velocity data

how to detect fixations by using the I-VT algorithm

how to detect saccades by using the microsaccades algorithm

how to compute additional event properties for your analysis

how to save your preprocessed data

how to plot the saccadic main sequence of your data

Downloading one of the public datasets

We import pymovements as the alias pm for convenience. We also import polars as pl, which we will use later to filter the event dataframes.

[1]:

import polars as pl

import pymovements as pm


pymovements provides a library of publicly available datasets.

You can browse through the available dataset definitions here: Datasets

For this tutorial we will limit ourselves to the ToyDataset due to its minimal space requirements.

Other datasets can be downloaded by simply replacing ToyDataset with one of the other available datasets.

We can initialize the dataset by passing the desired dataset name as a string argument and then download it.

Additionally, we need to specify the root directory path of the data.

[2]:

dataset = pm.Dataset('ToyDataset', path='data/ToyDataset')

dataset.download()

Downloading http://github.com/aeye-lab/pymovements-toy-dataset/zipball/6cb5d663317bf418cec0c9abe1dde5085a8a8ebd/ to data/ToyDataset/downloads/pymovements-toy-dataset.zip

pymovements-toy-dataset.zip: 100%|██████████| 3.06M/3.06M [00:00<00:00, 25.5MB/s]

Checking integrity of pymovements-toy-dataset.zip

Extracting pymovements-toy-dataset.zip to data/ToyDataset/raw

[2]:

<pymovements.dataset.dataset.Dataset at 0x7fa7f422c490>

Our downloaded dataset will be placed in a new directory with the name of the dataset:

[3]:

dataset.path

[3]:

PosixPath('data/ToyDataset')

Archive files are automatically extracted into the path specified by Dataset.paths.raw:

[4]:

dataset.paths.raw

[4]:

PosixPath('data/ToyDataset/raw')

Loading your data into memory

Next we load our dataset into memory to be able to work with it:

[5]:

dataset.load()

100%|██████████| 20/20 [00:00<00:00, 20.35it/s]

[5]:

<pymovements.dataset.dataset.Dataset at 0x7fa7f422c490>

This fills two attributes with data. The first is the fileinfo attribute, which holds the basic information for each file:

[6]:

dataset.fileinfo.head()

[6]:

| text_id | page_id | filepath |
|---|---|---|
| i64 | i64 | str |
| 0 | 1 | "aeye-lab-pymov… |
| 0 | 2 | "aeye-lab-pymov… |
| 0 | 3 | "aeye-lab-pymov… |
| 0 | 4 | "aeye-lab-pymov… |
| 0 | 5 | "aeye-lab-pymov… |

We notice that a text_id and a page_id are specified for each filepath.

We have also loaded our gaze data into the dataframes in the gaze attribute:

[7]:

dataset.gaze[0].frame.head()

[7]:

| time | stimuli_x | stimuli_y | text_id | page_id | pixel |
|---|---|---|---|---|---|
| f32 | f32 | f32 | i64 | i64 | list[f32] |
| 1.988145e6 | -1.0 | -1.0 | 0 | 1 | [206.800003, 152.399994] |
| 1.988146e6 | -1.0 | -1.0 | 0 | 1 | [206.899994, 152.100006] |
| 1.988147e6 | -1.0 | -1.0 | 0 | 1 | [207.0, 151.800003] |
| 1.988148e6 | -1.0 | -1.0 | 0 | 1 | [207.100006, 151.699997] |
| 1.988149e6 | -1.0 | -1.0 | 0 | 1 | [207.0, 151.5] |

Apart from some trial identifier columns, we see the columns time and pixel.

The pixel column holds the horizontal and vertical pixel coordinates, as a two-element list, at the timestep specified by time.

We can also load only a subset of the data by specifying values of the fileinfo columns. Each key of the dictionary refers to a column in the fileinfo dataframe. The values can be of type bool, int, float or str, but also lists and ranges:

[8]:

subset = {
    'text_id': 0,
    'page_id': [0, 1],
}

dataset.load(subset=subset)

dataset.fileinfo

100%|██████████| 1/1 [00:00<00:00, 33.57it/s]

[8]:

| text_id | page_id | filepath |
|---|---|---|
| i64 | i64 | str |
| 0 | 1 | "aeye-lab-pymov… |

We have now selected only a small subset of our data.
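The subset semantics described above can be sketched in plain Python. This is a hypothetical illustration, not pymovements' implementation: the helper names `matches_subset` and `filter_fileinfo` are made up, and the sketch assumes that a scalar value must match exactly while a list or range acts as a set of allowed values. Under that assumption, `'page_id': [0, 1]` keeps only page 1 of text 0, which agrees with the single row in the output above.

```python
def matches_subset(row: dict, subset: dict) -> bool:
    """Hypothetical helper: True if every subset key matches the row's value."""
    for key, wanted in subset.items():
        value = row[key]
        if isinstance(wanted, (list, range)):
            # Lists and ranges are treated as sets of allowed values.
            if value not in wanted:
                return False
        elif value != wanted:
            # Scalars (bool, int, float, str) must match exactly.
            return False
    return True


def filter_fileinfo(rows, subset):
    """Hypothetical helper: keep only the fileinfo rows matching the subset."""
    return [row for row in rows if matches_subset(row, subset)]


fileinfo = [
    {'text_id': 0, 'page_id': 1, 'filepath': 'a.csv'},
    {'text_id': 0, 'page_id': 2, 'filepath': 'b.csv'},
    {'text_id': 1, 'page_id': 1, 'filepath': 'c.csv'},
]
selected = filter_fileinfo(fileinfo, {'text_id': 0, 'page_id': [0, 1]})
print(selected)  # only the row with text_id 0 and page_id 1 remains
```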

Preprocessing raw gaze data

We now want to preprocess our gaze data by transforming pixel coordinates into degrees of visual angle and then computing velocity data from our positional data.

[9]:

dataset.pix2deg()

dataset.gaze[0].frame.head()

100%|██████████| 1/1 [00:00<00:00, 17.65it/s]

[9]:

| time | stimuli_x | stimuli_y | text_id | page_id | pixel | position |
|---|---|---|---|---|---|---|
| f32 | f32 | f32 | i64 | i64 | list[f32] | list[f32] |
| 1.988145e6 | -1.0 | -1.0 | 0 | 1 | [206.800003, 152.399994] | [-10.697598, -8.8524] |
| 1.988146e6 | -1.0 | -1.0 | 0 | 1 | [206.899994, 152.100006] | [-10.695184, -8.859678] |
| 1.988147e6 | -1.0 | -1.0 | 0 | 1 | [207.0, 151.800003] | [-10.692768, -8.866957] |
| 1.988148e6 | -1.0 | -1.0 | 0 | 1 | [207.100006, 151.699997] | [-10.690351, -8.869382] |
| 1.988149e6 | -1.0 | -1.0 | 0 | 1 | [207.0, 151.5] | [-10.692768, -8.874233] |

We notice that a new column has appeared: position. This column specifies the position coordinates in degrees of visual angle (dva).
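A common way to convert a pixel coordinate into degrees of visual angle places the origin at the screen center, converts the pixel offset into centimeters using the physical screen size, and takes the arctangent of that offset over the viewing distance. The sketch below assumes this geometry for one axis. The function name `pix2deg_1d` and the screen size and viewing distance are hypothetical values chosen for illustration, and pymovements may handle details such as the exact screen center differently.

```python
import math


def pix2deg_1d(pixel: float, screen_px: int, screen_cm: float, distance_cm: float) -> float:
    """Convert one pixel coordinate to degrees of visual angle (sketch).

    Assumes the origin of the degree coordinates lies at the screen center.
    """
    center_px = (screen_px - 1) / 2  # assumption: center between first and last pixel
    offset_cm = (pixel - center_px) * screen_cm / screen_px  # pixels -> centimeters
    return math.degrees(math.atan2(offset_cm, distance_cm))


# Hypothetical screen geometry, for illustration only.
SCREEN_WIDTH_PX, SCREEN_WIDTH_CM, DISTANCE_CM = 1280, 38.0, 68.0

for x in (0.0, 639.5, 1279.0):
    print(x, round(pix2deg_1d(x, SCREEN_WIDTH_PX, SCREEN_WIDTH_CM, DISTANCE_CM), 3))
```

The same function is applied separately to the vertical axis, with the screen height in pixels and centimeters.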

To transform our positional data into velocity data, we will use the Savitzky-Golay differentiation filter.

We can also specify additional parameters for this method, here the polynomial degree and the window length:

[10]:

dataset.pos2vel(method='savitzky_golay', degree=2, window_length=7)

dataset.gaze[0].frame.head()

100%|██████████| 1/1 [00:00<00:00, 31.49it/s]

[10]:

| time | stimuli_x | stimuli_y | text_id | page_id | pixel | position | velocity |
|---|---|---|---|---|---|---|---|
| f32 | f32 | f32 | i64 | i64 | list[f32] | list[f32] | list[f32] |
| 1.988145e6 | -1.0 | -1.0 | 0 | 1 | [206.800003, 152.399994] | [-10.697598, -8.8524] | [1.207726, -3.11923] |
| 1.988146e6 | -1.0 | -1.0 | 0 | 1 | [206.899994, 152.100006] | [-10.695184, -8.859678] | [1.207692, -4.072189] |
| 1.988147e6 | -1.0 | -1.0 | 0 | 1 | [207.0, 151.800003] | [-10.692768, -8.866957] | [1.035145, -4.765272] |
| 1.988148e6 | -1.0 | -1.0 | 0 | 1 | [207.100006, 151.699997] | [-10.690351, -8.869382] | [1.207726, -4.245451] |
| 1.988149e6 | -1.0 | -1.0 | 0 | 1 | [207.0, 151.5] | [-10.692768, -8.874233] | [1.552786, -2.339193] |
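To make the Savitzky-Golay differentiation concrete, here is a minimal one-axis sketch. It relies on a known property of the filter: at the window center, least-squares fits of degree 1 and degree 2 give the same first-derivative kernel, `k / sum(k**2)` for offsets `k = -h..h`. The per-sample slope is then multiplied by the sampling rate to obtain units per second. The function name `savgol_velocity` is hypothetical, and unlike the output above (which has values for the first rows), this sketch does no edge padding and leaves edge samples as `None`.

```python
def savgol_velocity(positions, sampling_rate, window_length=7):
    """Sketch of Savitzky-Golay differentiation for polynomial degree 1 or 2.

    At the window center, degree-1 and degree-2 least-squares fits yield the
    same first-derivative kernel k / sum(k**2) for k = -h..h.
    Edge samples (closer than h to either end) are left as None; no padding.
    """
    if window_length < 3 or window_length % 2 == 0:
        raise ValueError('window_length must be an odd number >= 3')
    half = window_length // 2
    offsets = range(-half, half + 1)
    norm = sum(k * k for k in offsets)
    velocities = [None] * len(positions)
    for i in range(half, len(positions) - half):
        slope_per_sample = sum(k * positions[i + k] for k in offsets) / norm
        velocities[i] = slope_per_sample * sampling_rate  # convert to units per second
    return velocities


# Usage: a gaze coordinate moving 0.5 dva per sample, recorded at 1000 Hz.
x = [0.5 * i for i in range(20)]
print(savgol_velocity(x, sampling_rate=1000)[3:6])  # [500.0, 500.0, 500.0]
```

For two-dimensional positions, the same kernel is applied to the horizontal and vertical components separately.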

There is also the more general apply() method, which can be used to apply both transformation and event detection methods. Here we use it to compute acceleration data from the positional data:

[11]:

dataset.apply('pos2acc', degree=2, window_length=7)

dataset.gaze[0].frame.head()

100%|██████████| 1/1 [00:00<00:00, 28.42it/s]

[11]:

| time | stimuli_x | stimuli_y | text_id | page_id | pixel | position | velocity | acceleration |
|---|---|---|---|---|---|---|---|---|
| f32 | f32 | f32 | i64 | i64 | list[f32] | list[f32] | list[f32] | list[f32] |
| 1.988145e6 | -1.0 | -1.0 | 0 | 1 | [206.800003, 152.399994] | [-10.697598, -8.8524] | [1.207726, -3.11923] | [690.255859, -1501.787231] |
| 1.988146e6 | -1.0 | -1.0 | 0 | 1 | [206.899994, 152.100006] | [-10.695184, -8.859678] | [1.207692, -4.072189] | [0.045413, -866.25415] |
| 1.988147e6 | -1.0 | -1.0 | 0 | 1 | [207.0, 151.800003] | [-10.692768, -8.866957] | [1.035145, -4.765272] | [-575.111023, -57.606468] |
| 1.988148e6 | -1.0 | -1.0 | 0 | 1 | [207.100006, 151.699997] | [-10.690351, -8.869382] | [1.207726, -4.245451] | [-230.039871, 1328.491089] |
| 1.988149e6 | -1.0 | -1.0 | 0 | 1 | [207.0, 151.5] | [-10.692768, -8.874233] | [1.552786, -2.339193] | [690.006104, 2021.630615] |
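The acceleration column is a second derivative. Assuming it is also obtained from a degree-2 Savitzky-Golay fit (the call above passes `degree=2` and `window_length=7`), a one-axis sketch looks like this. On a symmetric window the basis `1, k, k**2 - m` (with `m` the mean of `k**2`) is orthogonal, so the fitted quadratic coefficient is a simple weighted sum and the second derivative is twice that coefficient, scaled by the squared sampling rate. The function name `savgol_acceleration` is hypothetical and, as before, edge samples are left as `None`.

```python
def savgol_acceleration(positions, sampling_rate, window_length=7):
    """Sketch of a second derivative from a degree-2 Savitzky-Golay fit.

    With the basis 1, k, k**2 - m (m = mean of k**2), which is orthogonal on a
    symmetric window, the fitted k**2 coefficient is
    sum(b_k * x_k) / sum(b_k**2), and the second derivative is twice that.
    Edge samples are left as None.
    """
    if window_length < 3 or window_length % 2 == 0:
        raise ValueError('window_length must be an odd number >= 3')
    half = window_length // 2
    offsets = range(-half, half + 1)
    mean_sq = sum(k * k for k in offsets) / window_length
    basis = [k * k - mean_sq for k in offsets]
    norm = sum(b * b for b in basis)
    accelerations = [None] * len(positions)
    for i in range(half, len(positions) - half):
        quad_coeff = sum(b * positions[i + k] for b, k in zip(basis, offsets)) / norm
        accelerations[i] = 2 * quad_coeff * sampling_rate ** 2  # per second squared
    return accelerations


# Usage: x = t**2 has a constant second derivative of 2 (per sample squared).
x = [float(i * i) for i in range(20)]
print(savgol_acceleration(x, sampling_rate=1)[3:6])  # [2.0, 2.0, 2.0]
```

For `window_length=7` the kernel works out to `(5, 0, -3, -4, -3, 0, 5) / 42`, and for `window_length=3` it reduces to the central second difference `(1, -2, 1)`.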

Detecting events

Now let’s detect some events.

First, we detect fixations with the I-VT algorithm using its default parameters:

[12]:

dataset.detect_events('ivt')

dataset.events[0].frame.head()

1it [00:00, 46.89it/s]

[12]:

| text_id | page_id | name | onset | offset | duration |
|---|---|---|---|---|---|
| i64 | i64 | str | i64 | i64 | i64 |
| 0 | 1 | "fixation" | 1988145 | 1988322 | 177 |
| 0 | 1 | "fixation" | 1988351 | 1988546 | 195 |
| 0 | 1 | "fixation" | 1988592 | 1988736 | 144 |
| 0 | 1 | "fixation" | 1988788 | 1989012 | 224 |
| 0 | 1 | "fixation" | 1989044 | 1989170 | 126 |
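I-VT (velocity-threshold identification) labels samples whose velocity magnitude stays below a threshold as fixation samples and merges consecutive runs of such samples into fixations, discarding runs that are too short. A minimal sketch, assuming velocities in deg/s at one sample per millisecond; the function name `ivt`, the threshold of 20 deg/s and the minimum duration of 100 samples are illustrative assumptions, not necessarily pymovements' defaults.

```python
import math


def ivt(velocities, velocity_threshold=20.0, minimum_duration=100):
    """Sketch of I-VT fixation detection.

    velocities: list of (vx, vy) tuples in deg/s, one per sample.
    minimum_duration: minimum run length in samples (1 sample = 1 ms at 1000 Hz).
    Returns a list of (onset, offset) sample indices, offset inclusive.
    """
    events = []
    start = None
    # A trailing sentinel above the threshold closes a run that reaches the end.
    for i, (vx, vy) in enumerate(list(velocities) + [(math.inf, 0.0)]):
        is_slow = math.hypot(vx, vy) < velocity_threshold
        if is_slow and start is None:
            start = i
        elif not is_slow and start is not None:
            if i - start >= minimum_duration:
                events.append((start, i - 1))
            start = None
    return events


# Usage: two long slow periods and one short one, separated by fast movement.
samples = (
    [(1.0, 1.0)] * 150 + [(100.0, 0.0)] * 20 + [(1.0, 0.0)] * 50
    + [(100.0, 0.0)] * 5 + [(0.0, 0.0)] * 120
)
print(ivt(samples))  # [(0, 149), (225, 344)]
```

The 50-sample slow period is dropped because it is shorter than the minimum duration.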

Next, we detect saccades with the microsaccades algorithm. This time we don’t rely only on the default parameters but specify our own minimum duration:

[13]:

dataset.detect_events('microsaccades', minimum_duration=8)

dataset.events[0].frame.filter(pl.col('name') == 'saccade').head()

1it [00:00, 36.79it/s]

[13]:

| text_id | page_id | name | onset | offset | duration |
|---|---|---|---|---|---|
| i64 | i64 | str | i64 | i64 | i64 |
| 0 | 1 | "saccade" | 1988323 | 1988337 | 14 |
| 0 | 1 | "saccade" | 1988341 | 1988351 | 10 |
| 0 | 1 | "saccade" | 1988546 | 1988567 | 21 |
| 0 | 1 | "saccade" | 1988570 | 1988583 | 13 |
| 0 | 1 | "saccade" | 1988736 | 1988760 | 24 |
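The name microsaccades presumably refers to the velocity-based detection approach of Engbert and Kliegl: the spread of each velocity component is estimated with a median-based estimator, scaled by a threshold factor, and samples lying outside the resulting ellipse become saccade candidates; runs of at least `minimum_duration` samples are kept. The sketch below assumes that approach. The names `median_sigma`, `microsaccades` and `threshold_factor`, as well as the default values, are illustrative assumptions rather than pymovements' API.

```python
import math
import statistics


def median_sigma(values):
    """Median-based spread estimate: sqrt(median(v**2) - median(v)**2)."""
    variance = statistics.median([v * v for v in values]) - statistics.median(values) ** 2
    return math.sqrt(max(variance, 0.0))


def microsaccades(vx, vy, threshold_factor=6.0, minimum_duration=6):
    """Sketch of an Engbert and Kliegl style saccade detector.

    A sample is a candidate if it lies outside the ellipse with radii
    threshold_factor * sigma_x and threshold_factor * sigma_y.
    Returns (onset, offset) sample indices, offset inclusive.
    """
    rx = threshold_factor * median_sigma(vx)
    ry = threshold_factor * median_sigma(vy)
    if rx == 0 or ry == 0:
        raise ValueError('velocity spread is zero, threshold is undefined')
    outside = [(x / rx) ** 2 + (y / ry) ** 2 > 1 for x, y in zip(vx, vy)]
    events, start = [], None
    for i, flag in enumerate(outside + [False]):  # sentinel closes a final run
        if flag and start is None:
            start = i
        elif not flag and start is not None:
            if i - start >= minimum_duration:
                events.append((start, i - 1))
            start = None
    return events


# Usage: low-velocity noise with a 10-sample high-velocity burst at 40..49.
vx = [100.0 if 40 <= i < 50 else float(i % 3 - 1) for i in range(100)]
vy = [float(i % 3 - 1) for i in range(100)]
print(microsaccades(vx, vy, minimum_duration=8))  # [(40, 49)]
```

The median-based estimator keeps the threshold close to the noise level even though the burst contains very large velocities.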

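We can also use the more general interface of the apply() method. Here we detect fixations with the I-DT (dispersion-threshold) algorithm and store them under a custom event name: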

[14]:

dataset.apply('idt', dispersion_threshold=2.7, name='fixation.idt')

dataset.events[0].frame.filter(pl.col('name') == 'fixation.idt').head()

100%|██████████| 1/1 [00:00<00:00, 1.95it/s]

[14]:

| text_id | page_id | name | onset | offset | duration |
|---|---|---|---|---|---|
| i64 | i64 | str | i64 | i64 | i64 |
| 0 | 1 | "fixation.idt" | 1988145 | 1988563 | 418 |
| 0 | 1 | "fixation.idt" | 1988564 | 1988750 | 186 |
| 0 | 1 | "fixation.idt" | 1988751 | 1989178 | 427 |
| 0 | 1 | "fixation.idt" | 1989179 | 1989436 | 257 |
| 0 | 1 | "fixation.idt" | 1989437 | 1989600 | 163 |
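I-DT (dispersion-threshold identification) slides a window of at least the minimum duration over the positions; if the window's dispersion stays below the threshold, the window is grown sample by sample until the threshold would be exceeded, and the result is recorded as a fixation. A minimal sketch of this classic formulation, assuming positions in dva and dispersion defined as the horizontal range plus the vertical range; the function names and the minimum duration of 100 samples are illustrative assumptions, and only the threshold of 2.7 is taken from the call above.

```python
def dispersion(points):
    """Sum of the horizontal and vertical ranges of a list of (x, y) points."""
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    return (max(xs) - min(xs)) + (max(ys) - min(ys))


def idt(positions, dispersion_threshold=2.7, minimum_duration=100):
    """Sketch of I-DT fixation detection on (x, y) positions in dva.

    minimum_duration is given in samples. Returns (onset, offset) sample
    indices, offset inclusive.
    """
    events = []
    n = len(positions)
    i = 0
    while i + minimum_duration <= n:
        j = i + minimum_duration  # exclusive end of the initial window
        if dispersion(positions[i:j]) <= dispersion_threshold:
            # Grow the window while the dispersion stays within the threshold.
            while j < n and dispersion(positions[i:j + 1]) <= dispersion_threshold:
                j += 1
            events.append((i, j - 1))
            i = j
        else:
            i += 1  # slide the window start by one sample
    return events


# Usage: two stable gaze clusters 5 dva apart, 120 samples each.
gaze = [(0.1 * (k % 2), 0.0) for k in range(120)] + [(5.0, 5.0)] * 120
print(idt(gaze, dispersion_threshold=1.0))  # [(0, 119), (120, 239)]
```

This direct version recomputes the dispersion for every window, which is simple to follow but quadratic in the worst case.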

Computing event properties

The event dataframe currently holds only the name, onset, offset and duration of each event, plus some identifier columns at the beginning.

We now want to compute some additional properties for each event. Examples of event properties are the peak velocity, the amplitude and the dispersion of an event.

We start by computing the dispersion:

[15]:

dataset.compute_event_properties("dispersion")

dataset.events[0].frame.head()

1it [00:07, 7.96s/it]

[15]:

| text_id | page_id | name | onset | offset | duration | dispersion |
|---|---|---|---|---|---|---|
| i64 | i64 | str | i64 | i64 | i64 | f32 |
| 0 | 1 | "fixation" | 1988145 | 1988322 | 177 | 0.154959 |
| 0 | 1 | "fixation" | 1988351 | 1988546 | 195 | 0.291834 |
| 0 | 1 | "fixation" | 1988592 | 1988736 | 144 | 0.296297 |
| 0 | 1 | "fixation" | 1988788 | 1989012 | 224 | 0.271854 |
| 0 | 1 | "fixation" | 1989044 | 1989170 | 126 | 0.349001 |

We notice that a new column with the name dispersion has appeared in the event dataframe.
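Computing an event property amounts to selecting the gaze samples between the event's onset and offset and reducing them to a single number. A minimal sketch for dispersion, assuming that onset and offset are both inclusive and that dispersion is the horizontal range plus the vertical range of the positions; both assumptions and the helper names `event_samples` and `dispersion` are illustrative, not taken from pymovements.

```python
def event_samples(times, positions, onset, offset):
    """Positions whose timestamp lies in [onset, offset] (both inclusive, assumed)."""
    return [pos for t, pos in zip(times, positions) if onset <= t <= offset]


def dispersion(points):
    """Assumed definition: horizontal range plus vertical range, in dva."""
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    return (max(xs) - min(xs)) + (max(ys) - min(ys))


# Made-up samples: a fixation at 100..102 followed by a jump elsewhere.
times = [100, 101, 102, 103, 104]
positions = [(0.0, 0.0), (0.25, 0.5), (0.5, 0.25), (9.0, 9.0), (9.0, 9.0)]
fixation = event_samples(times, positions, onset=100, offset=102)
print(dispersion(fixation))  # 1.0
```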

We can also pass a list of properties to compute all of our desired properties in a single run. Let’s add the amplitude and peak velocity:

[16]:

dataset.compute_event_properties(["amplitude", "peak_velocity"])

dataset.events[0].frame.head()

1it [00:07, 7.86s/it]

[16]:

| text_id | page_id | name | onset | offset | duration | dispersion | amplitude | peak_velocity |
|---|---|---|---|---|---|---|---|---|
| i64 | i64 | str | i64 | i64 | i64 | f32 | f32 | f32 |
| 0 | 1 | "fixation" | 1988145 | 1988322 | 177 | 0.154959 | 0.110074 | 16.241352 |
| 0 | 1 | "fixation" | 1988351 | 1988546 | 195 | 0.291834 | 0.206398 | 18.88537 |
| 0 | 1 | "fixation" | 1988592 | 1988736 | 144 | 0.296297 | 0.209546 | 17.690414 |
| 0 | 1 | "fixation" | 1988788 | 1989012 | 224 | 0.271854 | 0.192719 | 19.130249 |
| 0 | 1 | "fixation" | 1989044 | 1989170 | 126 | 0.349001 | 0.304363 | 18.616142 |
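Amplitude and peak velocity follow the same pattern as dispersion: reduce the samples of one event to a single number. The sketch below assumes one common set of definitions, which may differ from pymovements' exact implementation: the amplitude is the Euclidean norm of the horizontal and vertical position ranges, and the peak velocity is the largest velocity magnitude within the event. The function names are hypothetical.

```python
import math


def amplitude(points):
    """Assumed definition: Euclidean norm of the horizontal and vertical ranges."""
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    return math.hypot(max(xs) - min(xs), max(ys) - min(ys))


def peak_velocity(velocities):
    """Largest velocity magnitude (deg/s) among the event's (vx, vy) samples."""
    return max(math.hypot(vx, vy) for vx, vy in velocities)


print(amplitude([(0.0, 0.0), (3.0, 1.0), (1.0, 4.0)]))  # 5.0
print(peak_velocity([(3.0, 4.0), (1.0, 1.0), (0.0, -2.0)]))  # 5.0
```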

Plotting our data

pymovements provides a range of plotting functions.

You can browse through the available plotting functions here: Plotting

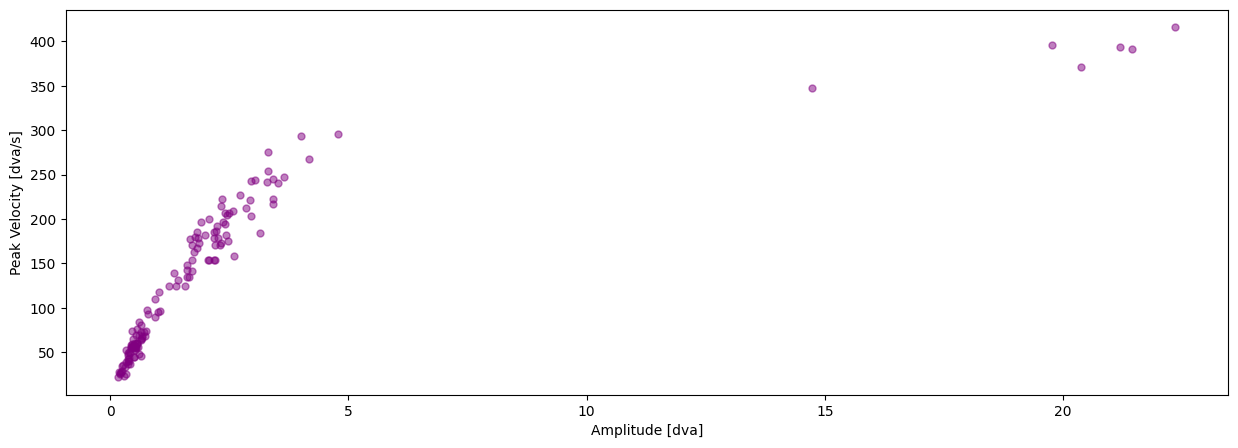

In this tutorial we will plot the saccadic main sequence of our data.

[17]:

pm.plotting.main_sequence_plot(dataset.events[0])
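The saccadic main sequence relates the amplitude of each saccade to its peak velocity. Since the event dataframe here also contains fixation rows, only saccade events are meaningful for this relation. The sketch below shows, as an assumption about the data preparation rather than pymovements' actual plotting code, how such (amplitude, peak velocity) points could be collected from event rows; the function name and the numbers are made up for illustration.

```python
def main_sequence_points(event_rows):
    """Return (amplitude, peak_velocity) pairs of saccades, sorted by amplitude."""
    return sorted(
        (row['amplitude'], row['peak_velocity'])
        for row in event_rows
        if row['name'] == 'saccade'  # fixations and other events are ignored
    )


# Made-up event rows for illustration.
rows = [
    {'name': 'fixation', 'amplitude': 0.11, 'peak_velocity': 16.2},
    {'name': 'saccade', 'amplitude': 2.0, 'peak_velocity': 120.0},
    {'name': 'saccade', 'amplitude': 0.5, 'peak_velocity': 45.0},
]
print(main_sequence_points(rows))  # [(0.5, 45.0), (2.0, 120.0)]
```

Plotting these pairs as a scatter plot, with amplitude on the x-axis and peak velocity on the y-axis, gives the main sequence.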

Saving and loading your dataframes

If we want to save interim results, we can simply use the save() method:

[18]:

dataset.save()

100%|██████████| 1/1 [00:00<00:00, 253.46it/s]

100%|██████████| 1/1 [00:00<00:00, 118.69it/s]

[18]:

<pymovements.dataset.dataset.Dataset at 0x7fa7f422c490>

Let’s test this out by initializing a new Dataset object in the same directory and loading the preprocessed gaze and event data.

This time we don’t need to download anything.

[19]:

preprocessed_dataset = pm.Dataset('ToyDataset', path='data/ToyDataset')

preprocessed_dataset.load(events=True, preprocessed=True, subset=subset)

display(preprocessed_dataset.gaze[0])

display(preprocessed_dataset.events[0])

100%|██████████| 1/1 [00:00<00:00, 87.59it/s]

100%|██████████| 1/1 [00:00<00:00, 599.53it/s]

<pymovements.gaze.gaze_dataframe.GazeDataFrame at 0x7fa7b24e0ca0>

<pymovements.events.frame.EventDataFrame at 0x7fa7b250dd00>